The social and economic impact of what we know

Hector McNeill (Footnote 1)

SEEL

Hayek spoke of the "Knowledge problem" facing central governments, caused by their ignorance of the details of local conditions throughout an economy. This is cited as a reason why central planning cannot work.

Macroeconomic management constrains free markets because it is based on direct interventions by government and the Bank of England in taxation, money volumes and interest rates; this is inefficient and ineffective.

The reason for such inappropriate policies is linked to the relative significance we assign to different types of knowledge.

Please note: this article traverses many necessary topics because of the nature of knowledge. To keep the article to a manageable size, footnotes are used to provide several explanatory details. These are included as links indicated as (Footnote #), and each footnote has a direct link back to the location of its in-text reference.


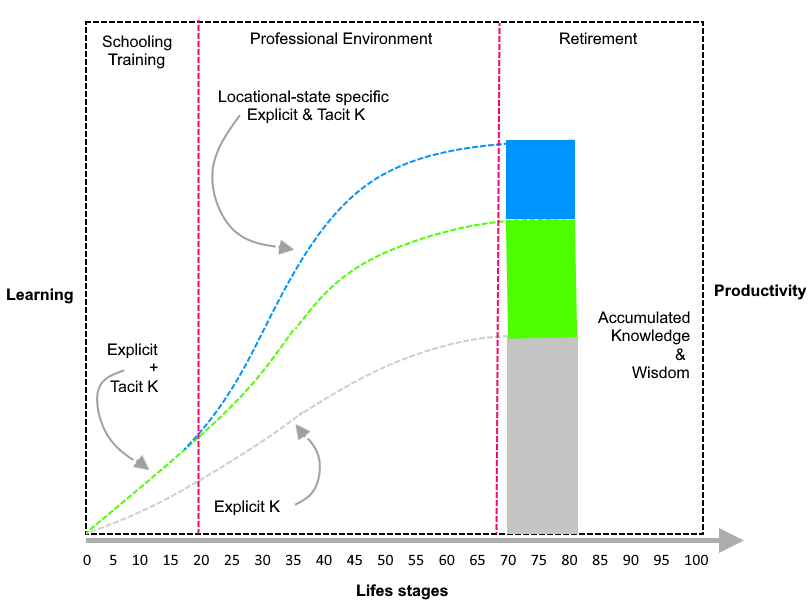

This topic, prompted by an attempt to rationalize Hayek's observation concerning the "knowledge problem", provides an opportunity to develop a more ambitious explanation of the relevance of different types of knowledge and to review the social and economic impacts of what we know. It is a modest attempt to set out, in a practical fashion, the role of different types of knowledge in the economy, a role identified by many economists and engineers, including Theodore Wright (1936), who revealed the learning curve; Nicholas Kaldor (1957) and Robert Solow (1957), on the role of technology in economic growth; Kenneth Arrow (1962), on learning by doing; Gordon Moore (1965), whose law concerns trends in logic circuit density; and many others.

The Real Incomes Approach is completely decentralized: its policy instruments are controlled by economic actors in an operation that stabilizes or augments real incomes. All policy instruments have a Real Incomes Objective (RIO) as the principal policy target. Real incomes embed what, under conventional economic policies, are often disparate and separate targets such as interest rates, inflation, money supply, unemployment and the balance of payments. RIO is completely supply side and controlled by the economic constituents, and thereby avoids what Hayek identified as a "knowledge problem", since the policy involves no central government or central bank interventions in any market.

An important aspect of achieving this is optimizing the combination of tacit and explicit knowledge (Footnote 2). There is, however, an additional dimension that can help catalyze an even more effective level of optimization, leading to less risky productive investment and innovation: locational-state knowledge, gained from applying Locational-State Theory (LST) (Footnote 3). The question of knowledge is central to the efficient operation of the economy and to a harmonious state of affairs on the social front.

Hayek's perspective on the "Knowledge problem" was published in 1945, but Ludwig von Mises had raised the same issue in 1920, as did Ronald Coase in 1960, with respect to the outcome of attempting to apply centralized decisions such as Arthur Pigou's tax proposals. These taxes were designed to discourage activities with adverse side effects, such as environmental pollution, the overloading of public health care as a result of the sale of tobacco products, and other types of negative externality. Although people were swayed by the logic of Coase and Mises pointing to a "calculation and knowledge problem", Pigovian taxes are prevalent today in the form of carbon emissions taxes (carbon trading) and taxes or surcharges on plastic bags. These taxes are supposed to redistribute the costs back to the producer and/or user that generates the negative externality. What often happens is that the trader simply bags the money. This does not mean that von Mises, Hayek and Coase are wrong. Indeed, they are probably right: the general lack of technical oversight of the application of carbon trading suggests that it is probably not working as the general population expects. The fact that it is heavily promoted by financial intermediaries and banks is enough to raise suspicion on this score.

In all cases, however, although in these examples the "Knowledge problem" relates to externalities caused by business, the argument is mainly aimed at externalities caused by faulty central government policies that adversely affect society.

Based on the work leading to the real incomes approach and the subsequent development of Locational-State Theory, Hayek's "Knowledge problem" is not generic but actually relates to a specific type of knowledge and, indeed, of policy. This probably also holds for the positions of von Mises and Coase.

Centralized policies are best applied to raising various conditions of the social constituency to some minimum acceptable standard. The post-war years saw many of Beveridge's welfare reforms introduced for this reason. Hayek's views on social policy are found in Chapter 9 of "The Road to Serfdom", under the title "Security and Freedom", where he expresses his support for (Footnote 4):

"...the certainty of a given minimum of sustenance for all," that is, for "some minimum of food, shelter, and clothing sufficient to preserve health and the capacity to work," as well as for state-assisted insurance against sickness, accident, and natural disaster.

There is no reference to any "Knowledge problem" here. This is probably because he refers to matters affecting the whole social constituency irrespective of location. However, when it comes to policies to advance the performance of economic constituents, the specificity of each circumstance requires that any information used to plan policies take into account locational-state knowledge, that is, knowledge of the state of the variables of interest associated with their location in space and time. The main characteristic of economic activities is their heterogeneity. At any time the economy is made up of companies of different ages, with different levels of competence and experience in their work forces and management, at different locations in geographic space exposed to different local logistics, using different technologies and techniques, and with different sizes of order book, cash flow and profitability. Under such circumstances it is self-evident that, when central government intervenes in financial markets through interest rates and money volumes, or in the compensation dimensions of the labour market through corporate (Footnote 5) and personal taxation, there will be significant differences in the degree to which each company wishes, or indeed is able, to respond to its benefit in an environment where policy is imposing changes. As a result, policies in reality generate winners, losers and those in a neutral policy-impact state. This can make operations difficult for Small & Medium Sized Enterprises (SMEs) as they attempt to grow, initially on the basis of low margins, and to consolidate their operations. Conventional policies, by default, tend to create situations that advantage specific groups. This is more than apparent in the benefits accruing to asset holders, as opposed to wage-earners, under quantitative easing (QE).
Indeed, Hayek also stated that "...policies which are now followed everywhere, which hand out the privilege of security now to this group and now to that, are ... rapidly creating conditions in which the striving for security tends to become stronger than the love of freedom."

Freedom

Hayek suggests that freedom is impaired. It is therefore necessary to assess what he says here about freedom being abused. Freedom has many definitions, but in an economic and constitutional sense the degree to which we benefit from freedom is, to a large extent, founded on what we know. To make sense of this statement it is necessary to establish how centralized "policies" can support a concept of freedom. Legislation that applies to all is a public good that guarantees an individual's ability to pursue their own objectives without constraint. At the same time, it is also there to ensure that constituents do not pursue their objectives at the expense of other constituents. If we consider legal frameworks to be policy based, since they are normally supported by regulations consisting of sanctions and enforcement, it is plain that this is another example where "centralized policy" is beneficial. The only "Knowledge problem" is monitoring people's behaviour. However, because the legal framework exists, anyone prejudiced by the behaviour of another can seek redress via the regulatory authority that oversees the relevant sector. So, depending upon individuals' awareness of the behaviour expected under the law, and upon the provisions that permit individuals to react, there is no significant "Knowledge problem". On the other hand, where regulations and sanctions for non-compliance are lightweight and very much under the control of those regulated, as is the case in financial regulation, abuse of constituents through financial dealings is so weakly "deterred" that the sanctions become irrelevant to regulating the market. When applied, they simply become a marginal cost of doing business.
However, if the very legislature that enacts laws and regulations "defending freedom" is also carrying out policies that are themselves the source of negative externalities, with differential impacts on the ability of constituents to pursue their objectives, then this is an abuse of the constitution. What is worse, because this is imposed by government, there are fewer obvious means of gaining redress other than voting the government out. And when the main opposition bases its policies on the same economic logic, the situation becomes a significant constitutional issue. It would seem that Hayek's statement is a rational one.

The real knowledge problem

It is notable that Hayek, as someone who supports specific minimum standards of welfare and who is also aware of the knowledge problem, does not reflect on how a nation also needs to establish minimum standards for people's awareness of knowledge and for their basic capabilities in using knowledge effectively and efficiently. I refer here to the basic question of what constitutes an adequate standard of general education. It is therefore interesting that Hayek did not include general standards of basic education in his list of "approved" welfare targets. At the moment, general education in this country is not fit for the purposes of a population living in a world of advancing technologies and a growing climate change problem, and with a need to address the lack of a manufacturing sector in order to reduce a persistent balance of payments problem in goods. The state-funded general education system currently has a serious "knowledge problem" associated with what types of knowledge are transferred, and how. However, general education is one area where provision through government-funded institutions, if structured to help the population advance the condition of the country, can have a major impact in supporting any policies, including RIO-Real Incomes Objective policies, and in helping SMEs secure more effective growth. If well conceived and executed, an appropriate education policy would not have a "knowledge problem"; rather, it would help make individual knowledge an important liberating factor in helping people solve economic problems.
In this context there are four distinct types of knowledge whose roles have implications for the standards of general education in this country and for how we, as a community, manage our personal and economic affairs. These are:

- General:
  - Basic explicit knowledge
  - Basic tacit knowledge
- Locational-state:
  - Specific explicit knowledge
  - Specific tacit knowledge

General, basic explicit knowledge

In general, the education system in this country, whether state schools, private schools (including public schools) or academies, is highly theoretical and consists of general, basic explicit knowledge. Under the Butler Education Act of 1944 there were technical schools, which one might consider to have taught general, basic tacit knowledge because they included training in some of the trades, including masonry, wood and metal work. Although well meaning, the Butler Act set in stone a particular intellectual caste system in which grammar schools were for students with academic aptitude and technical schools were limited to a "vocational" preparatory function catering for individuals who were "less academic" but "good with their hands".

General, basic tacit knowledge

Tacit knowledge and the economy

Many economists and engineers have presented copious evidence to establish that most economic growth comes from the development and application of tacit knowledge acquired by learning by doing.

It is the prime source of innovation in techniques and technologies, and of gains in productivity.

See: McNeill, H. W., "Technology, technique & real incomes", Charter House Essays in Political Economy.

Wright, T., "Factors Affecting the Cost of Airplanes", Journal of the Aeronautical Sciences, Volume 3, No. 4, pp. 122–128, 1936.

Kaldor, N., "A Model of Economic Growth", The Economic Journal, Volume 67, No. 268, pp. 591–624, 1957.

Solow, R. M., "Technical change and the aggregate production function", Review of Economics and Statistics, Volume 39, No. 3, pp. 312–320, 1957.

Arrow, K. J., "The economic implications of learning by doing", The Review of Economic Studies (Oxford Journals), Volume 29, No. 3, pp. 155–173, 1962.

Moore, G. E., "Cramming more components onto integrated circuits", Electronics, Volume 38, No. 8, April 19, 1965.

Nagy, B., Farmer, J. D., Bui, Q. M., Trancik, J. E., "Statistical Basis for Predicting Technological Progress", Santa Fe Institute, St. John's College, and Engineering Systems Division, Massachusetts Institute of Technology, Cambridge, MA 02139, USA, 2012.


Although only a few technical schools were established, some benefited from headmasters with a more socially inspiring vision of the role of technology in advancing wellbeing and economic stability. They considered their remit to be to provide a broader education, passing on to students a comprehensive understanding not only of theory but also, through practical exposure to a broad range of applied technologies and techniques, a deeper appreciation of the mechanics of how the economy works. The mission, as it were, was to help create individuals with a comprehensive and practical understanding of the role of science and technology in society. As a result of these efforts some technical schools began to compete with the grammar schools, sending pupils on to university and, in some cities, becoming the first choice of parents. The problem with this system was the selective so-called eleven plus (11+) examination, which was set at too early an age. This ignored the well established "late developer" or "slow learner" phenomenon. The local authority of the City of Portsmouth in Hampshire developed a solution to this injustice (Footnote 6). In general terms, the 11+ acted as a barrier preventing a large number of capable or "intelligent" individuals from securing the "benefits" of selective educational establishments such as the grammar schools. So, for many, the 11+ was considered to be a barrier to "social mobility". Nor were informed educational specialists unaware of the "late developer" issue, which had been shown to be a cause of waste as far back as 1900, when Maria Montessori joined the "medico-pedagogical institute", whose children were drawn from the asylum and from ordinary schools and were considered to be "slow learners" or even "uneducable" because of their "deficiencies". Some of these children later passed public examinations set for so-called "normal" children.
The problem lay in the unapplied nature of the teaching, which Montessori corrected through a gradual adjustment of didactic methods.

Solving the social mobility issue?

In 1965, Anthony Crosland introduced comprehensive education and did away with the 11+ for entry into "comprehensive schools". One of his hopes was that eliminating the 11+ would increase social mobility. What was not fully appreciated was that the comprehensive schools were, and remain, completely academic or theoretical, grounded in explicit knowledge. To gain social mobility there is a need for a general education that helps children develop a good understanding of the application of theory, not through yet more theoretical concepts, but by being given the opportunity to be exposed to a range of practical pursuits. To understand applied pursuits people need to "get their hands onto" the subject matter and understand what is involved in producing something tangible to some minimum standard of practice. This helps learners understand that a considerable amount of ordered discipline and method is involved in carrying out practical tasks well. As a result of such exposure, individuals are more likely to come across subjects that they find appealing, establishing the foundation of a future pathway towards a fruitful and productive life driven by a sense of vocation. It is this type of educational provision that can create more opportunities for social mobility.

Imitation & simulation

Practical teaching, whether concerned with developing explicit or tacit knowledge, begins with the learner imitating or copying what the instructor says or, even better, demonstrates, e.g. writing. The learner starts off by repeating what they are taught and perfects whatever they are doing through repetitive simulation, e.g. multiplication tables. In the formal sense, a simulation is the imitation of a logical operation, such as maths or grammar, or of a real-world process or system over time. Besides being the most effective way to pass on knowledge, simulation is used today in complex decision analysis using analytical models and, on the tacit side, for training airline pilots and workers in hazardous environments such as fire personnel. A significant aspect of handling "late developers" was, in most cases, simply allowing them to catch up in their own time, while in other cases it was a matter of organizing more engaging methods of instruction. In reality, the more practical and applied the tasks, the quicker children and adults learn to master techniques as well as comprehend the theory and logic of what they are doing. Purely theoretical expositions are, for many children and adults, sterile and difficult to relate to and follow. A purely theoretical teaching approach therefore holds back the majority of people. The ludicrous reality, however, is that the system judged those attaining a specific standard by a specific date to be more intelligent than those who, given the opportunity, attained the same or better grades two or three years later.

Embracing complexity

There is a tendency in scientific investigations to simplify things in order to isolate the effect of some variable of interest. This is a common practice in the statistical design of experiments. In economics it is the "ceteris paribus" (all other variables remaining fixed) condition: the effect of a single variable on some output is analyzed by freezing all other known variables and varying that single variable across a range of values. Most situations are more complex than this, and we therefore need to arrive at a state of affairs where we have a reasonable idea of the effects of the interactions of all critical variables on an output (Footnote 7).

The Perception-Semantic complex

One of the critical constraints on the transmission of knowledge is that imposed by the individuals in the knowledge "supply chain". This chain links some event or state of affairs to the perception of the individual observing it, to that individual's interpretation of what was observed, and then to the terminology that individual uses to describe the event to someone else. This perception-semantic complex is important because it is the initial step in disseminating knowledge. If there are problems with the perception-semantic complex, the value of the information transferred will vary significantly (Footnote 8).

Reflection and slow learning

There can be a good deal of misinterpretation in the assessment of individuals who do not pick up a concept as quickly as others. Some consider the magical test of the Intelligence Quotient (IQ) to be a clean and neutral way to assess intelligence. However, the ability to gain high or low marks in an IQ test is less related to intelligence than to an individual's culture and personal locational-state history (Footnote 9).
When this is understood, it becomes more apparent that the "slow learner" might, in reality, be highly intelligent. Because of their concern with other parts of the puzzle of which they are aware, there is often a hesitancy associated with an internal effort to rationalize "new additional information". This is not a sign of a lack of intelligence but rather evidence of a broader awareness of related information. And yet these individuals can end up with average or even low IQ test scores (Footnote 10).

Attitudes often get in the way

There is a general problem of a lack of engagement between people, especially where those who possess some knowledge or skill do not share it with others. This is an attitude issue, in the sense of a feeling or opinion about something or someone that elicits a particular way of behaving. It can affect inter-personal relations as well as the professional working environment. For example, middle management are sometimes not particularly concerned with the practical difficulties facing workers in poorly laid out production facilities. It is up to the workers to sort things out, and if they don't, someone's job could be on the line. The attitude is often, "It is not your job to reason; just produce what is needed." This echoes Tennyson's lines on the suicidal Charge of the Light Brigade at Balaclava, a case study in management incompetence and in a dismissive attitude towards the lives of the men concerned, whose outcome was then re-perceived by reclassifying this example of imposed, demented subservience as bravery:

"Theirs not to reason why, / Theirs but to do and die: / Into the valley of Death"

One of the reasons for management's lack of engagement with shop floor practicalities can be linked to management's own incapacities, resulting from having passed through an educational system that cultivates an exaggerated respect for theory while providing no useful experience in applied pursuits. A dangerous resulting attitude is to consider practical pursuits involving hand and eye coordination and the development of physical skills as inferior. Sometimes this micro-hierarchical mentality also creates resentment of shop floor people attempting to explain things to managers who do not have an adequate grasp of the topic in question. Without wishing to be dramatic, the significance of this is that, in many small companies, a lack of attention to feedback from the shop floor, and so to efficient operations and productivity, heralds the eventual demise of the companies concerned. It is notable that the government stresses the need for more expenditure on basic research and development, assigning large amounts of funding to it, while more practical efforts to improve the general education of the whole population receive less attention. Thomas McNeill, who was the headmaster of the Portsmouth Technical High School, was selected as a child to pass through "The Montessori Stream" at his school in Scotland as part of an evaluation of the method, and recalls meeting Maria Montessori when she visited the school. Amongst the salient points of this experience, he recalls that the instruction environment was pleasant and, above all, that pupils were encouraged always to remain considerate of fellow students. In this context children were encouraged to assist others to understand and to master tasks. Not understanding something was considered to be an issue of poor communication and not a defect on the part of those having difficulty.
A key factor in such a level of consideration and mutual collaboration is the ability to understand the particular difficulties being faced by another person by making the effort to find out what they are. This level of consideration often appears to "slow things down", but in reality it moves a whole body of colleagues forward at a steady rate. Thomas McNeill also recalled that, in relation to specific conceptual tasks, he and his Montessori stream colleagues seemed to have gained levels of perception that were not apparent in colleagues who had followed the "normal stream". He noticed this when, following the streaming exercise, he and his colleagues rejoined a "normal class". For example, for some reason they were more adept at correctly predicting conic sections and at handling 3D concepts; he was not sure why, but it would seem to have been related to the applied nature of Montessori teaching methods.

The SME problem and its potential

Most people in the United Kingdom are employed by small and medium-sized enterprises (SMEs). Supporting SMEs to succeed is the stated core aim of the UK Department for International Trade (DIT). Accounting for over 99% of all UK businesses, and approximately 50% of all private sector employment and private sector turnover, SMEs are the main potential source of economic growth. Smaller SMEs find it difficult to dedicate time to guiding employees in building up tacit knowledge. An intake that has passed through an educational system providing a good proportion of general tacit knowledge is therefore a vital component of any system designed to accelerate gains in overall corporate productivity and to raise standards to secure sustained export performance. In a recent interview Dr. Richard Werner described the German "Hidden Champions", some 1,500 SMEs that are leading exporters. Recently Germany, with a population of less than 100 million, had an export trade equal to that of China, with a population of 1.4 billion (Footnote 12). Richard Werner has also drawn attention to the other critical support that has enabled German SMEs to arrive at this impressive level of performance: the German banking system is dominated by 1,500 community banks. This means that 80% of German banks are not-for-profit, which has strengthened the German economy for the past 200 years. A banking system consisting of many small banks is also far less prone to boom-bust cycles, and it creates more jobs per given amount of loan than large banks do. Community banks thus also result in a more equal distribution of income and wealth. The USA has around 300 equivalent "Hidden Champions"; the UK has a long way to go.
The summary so far

Explicit knowledge is the way we record and communicate facts in words, pictures, tabulations, graphs, mathematical equations, decision analysis models and the like. Explicit knowledge is fairly easy to transmit from one person to another, and the technique used to communicate it can help accelerate its transmission. Tacit knowledge is internalized knowledge; it results from carrying out tasks, with competence improving gradually the more often tasks are repeated (the learning curve effect). Tacit knowledge cannot be transferred directly from one person to another; each person must acquire their own tacit knowledge and level of competence. This is because tacit knowledge combines the mental, coordination and motor capabilities associated with carrying out tasks. It is difficult to "accelerate" the acquisition of tacit knowledge. Explicit and tacit knowledge are set out as two different axes in the diagram below, and different "combinations" of tacit and explicit knowledge are set out as graphic coordinates on the "floor" of the diagram. One extreme, combining low explicit and low tacit knowledge, is point "a"; the other, combining high tacit and high explicit knowledge, is point "c". In terms of decision making, decisions taken in circumstance "c" are likely to be better informed, and therefore less risky, than decisions based on the weaker combination at point "a". Better quality decisions on operations, as well as decisions leading to successful change through innovation, are more likely to result from situations where more explicit and tacit knowledge has been accumulated by those involved in the decisions.
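The learning curve effect referred to above is commonly formalised, following Wright (1936), as a power law: each doubling of cumulative output cuts the cost, or labour time, of the next unit by a constant fraction. The Python sketch below is only an illustration under that assumption; the function name `learning_curve_cost`, the 80% progress ratio and the 100-hour first unit are hypothetical choices, not figures taken from this article.

```python
import math


def learning_curve_cost(first_unit_cost: float, cumulative_units: float,
                        progress_ratio: float) -> float:
    """Cost (or labour time) of the n-th unit under a Wright-style learning curve.

    Hypothetical helper for illustration. progress_ratio is the fraction of
    unit cost remaining after each doubling of cumulative output, so 0.8
    means a 20% reduction per doubling.
    """
    if cumulative_units < 1:
        raise ValueError("cumulative_units must be at least 1")
    if not 0 < progress_ratio <= 1:
        raise ValueError("progress_ratio must be in (0, 1]")
    # Learning exponent b is chosen so that 2 ** (-b) == progress_ratio.
    b = -math.log2(progress_ratio)
    return first_unit_cost * cumulative_units ** (-b)


# Hypothetical example: the first unit takes 100 hours, 80% progress ratio.
for n in (1, 2, 4, 8, 16):
    print(f"unit {n}: {learning_curve_cost(100.0, n, 0.8):.2f} hours")
```

With these hypothetical inputs the loop prints 100.00, 80.00, 64.00, 51.20 and 40.96 hours: the same 20% reduction at every doubling of cumulative output. Real progress ratios differ between activities and would have to be estimated from recorded data.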

Locational-state specific explicit & tacit knowledge

Locational-state specific explicit and tacit knowledge is the combination of data, information and records with the development of human capabilities associated with the locational-state characteristics of different geographic locations. It extends explicit knowledge in the sense of being more detailed than the information normally collected, adding information of utility in decision analysis. In the discipline of decision analysis a decision is defined as an irrevocable allocation of resources to a specific course of action involving the application of physical, human and financial resources. Therefore, the more precise and relevant the information used, the more effective will be the application of state-of-the-art techniques associated with the current status of tacit knowledge.

By far the best example is in the field of natural resources, ecology, agriculture and climate change (Footnote 13). However, it has applications throughout the economy. As a result, productivity and the rate of practical innovation can be expected to be enhanced by collecting and making more effective use of locational-state knowledge, as shown conceptually below.

Locational-state considerations can lead decision makers to specify and collect relevant data in a more refined way, so as to increase the proportion of variance explained in an analysis of variance of the data and thereby achieve the more refined analysis required for decision making. Since the explicit knowledge contains this more detailed content, and local tacit knowledge embeds the techniques developed to handle local circumstances, decisions based on this more detailed knowledge are likely to be better than those that did not make use of locational-state data. In this way the "knowledge problem" remains localised and, as a result, centralised policy needs to be adaptive enough to take advantage of this rather than constrain these advantages. RIO-Real Incomes Objective policies are designed to achieve this benefit. The rise in productivity can be illustrated conceptually in the diagram below.
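One common way to quantify the "explained" share of variance referred to above is the ratio used in a one-way analysis of variance: the between-group sum of squares divided by the total sum of squares, often written as eta squared. The Python sketch below assumes that location is treated as the grouping factor; the function name, the site names and the yield figures are hypothetical, invented purely to show the arithmetic, and are not data from this article or from SEEL.

```python
from statistics import mean


def variance_explained_by_groups(groups: dict[str, list[float]]) -> float:
    """Eta squared: between-group sum of squares / total sum of squares.

    Hypothetical helper for illustration. Each key is a location and each
    value holds the observations recorded there.
    """
    all_values = [v for values in groups.values() for v in values]
    grand_mean = mean(all_values)
    ss_total = sum((v - grand_mean) ** 2 for v in all_values)
    if ss_total == 0:
        return 0.0  # no variation at all, so nothing to explain
    # Variation attributable to differences between location means.
    ss_between = sum(len(values) * (mean(values) - grand_mean) ** 2
                     for values in groups.values())
    return ss_between / ss_total


# Hypothetical yields (t/ha) recorded at three made-up sites.
yields_by_site = {
    "site_A": [4.1, 4.3, 4.0],
    "site_B": [5.2, 5.0, 5.4],
    "site_C": [3.1, 3.3, 2.9],
}

eta_sq = variance_explained_by_groups(yields_by_site)
print(f"Share of yield variance explained by location: {eta_sq:.2f}")
```

An analysis that ignores location treats every observation as coming from a single group and so attributes none of this spread to site differences; adding the location grouping lets most of the variation in these hypothetical yields be explained, which is the kind of refinement the text argues locational-state data make possible.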

A concise summary of the social & economic impact of what we know


Footnote 1: Hector McNeill is director of SEEL-Systems Engineering Economics Lab.

Footnote 2: Explicit & Tacit knowledge

Elton Mayo wrote on this topic extensively (Reference: Mayo, E., "The Social Problems of an Industrial Civilization", Routledge & Kegan Paul Ltd., London, 148pp., 1949) but did not use the words "explicit" or "tacit". Therefore, in the extract below the tags [tacit] and [explicit] are inserted in the text to associate his terms with these. More significant sections are emboldened. "A simple distinction made by William James in 1890 has all the significance now that it had then; one can only suppose that its very simplicity has led the universities to brush it aside as obvious, which is true, or as of small account, which is not true. James pointed out that almost every civilized language except English has two commonplace words for knowledge, connaître and savoir: knowledge-of-acquaintance [tacit] and knowledge-about [explicit]. This distinction, simple as it is, nevertheless is exceedingly important; knowledge-of-acquaintance [tacit] comes from direct experience of fact and situation, knowledge-about [explicit] is the product of reflective and abstract thinking."

"Knowledge derived from experience [tacit] is hard to transmit, except by example, imitation, and trial and error, whereas erudition (knowledge-about) [explicit] is easily put into symbols-words, graphs, maps. Now this means that skills,[tacit] although transmissible to other persons, are only slowly so and are never truly articulate. Erudition [explicit] is highly articulate and can be not only readily transmitted but can be accumulated and preserved. The very fact that erudition (logic and systematic knowledge) can be so easily transmitted to others tends to prejudice university instruction in the social sciences heavily in its favour."

"Physics, chemistry, physiology have learned that far more than this must be given to a student. They have therefore developed laboratories in which students may acquire manipulative skill and be judged competent in terms of actual performance. In such studies the student is required to relate his-logical knowledge-about to his own direct acquaintance with the facts, his own capacity for skilled and manipulative performance. James's distinction between the two kinds of knowledge implies that a well-balanced person needs, within limits, technical dexterity in, handling things, and social dexterity in handling people; these are both derived from knowledge-of-acquaintance. In addition to this, he must have developed clinical or practical knowledge which, enables him to assess a whole situation at a glance. He also needs, if he is to be a scientist, logical knowledge which is analytical, abstract, systematic-in a word, the erudition of which Dr. Alan Gregg speaks; but it must be an erudition which derives from and relates itself to the observed facts of the student's special studies".

"Speaking historically, I think it can be asserted that a science, has generally come into being as a product of well-developed technical skill in a given area of activity. Someone, some skilled worker, has in a reflective moment attempted to make explicit the assumptions that are implicit in the skill itself. This marks the beginning of logico-experimental method. The assumptions once made explicit can be logically developed; the development leads to experimental changes of practice and so to the beginning of a science. The point to be remarked is that scientific abstractions are not drawn from thin air or uncontrolled reflection: they are from the beginning rooted deeply in a pre-existent skill. At this point, a comment taken from the lectures of a colleague, the late Lawrence Henderson, eminent in chemistry' seems apposite:

“In the complex business of living, as in medicine, both theory and practice are necessary conditions of understanding, and the method of Hippocrates is the only method that has ever succeeded widely and generally. The first element of that method is hard, persistent, intelligent, responsible, unremitting labour in the sick room, not in the library: the complete adaptation of the doctor to his task, an adaptation that is far from being merely intellectual. The second element of that method is accurate observation of things and events, selection, guided by judgement born of familiarity and experience, of the salient and recurrent phenomena, and their classification and methodological exploitation. The third element of that method is the judicious construction of a theory - not a philosophical theory, nor a grand effort of the imagination, nor a quasi-religious dogma, but a modest pedestrian affair . . . a useful walking-stick to help on the way. . . . All this may be summed up in a word: The physician must have first, intimate, habitual, intuitive familiarity with things; secondly, systematic knowledge of things; and thirdly, an effective way of thinking about things.”

Reference: Mayo, E., "The Social Problems of an Industrial Civilization", Routledge & Kegan Paul Ltd., London, 148pp. 1949.

Footnote 3: Locational-State Theory

Locational-state theory (LST) brings together much that is already known concerning the relationships between the values of variables in different locations according to geographic or time displacement. Indeed, on a personal basis, the elements of Locational-State Theory were discussed with my father, Thomas C. McNeill, in the context of General Semantics and Semantics during the early 1960s, and then in post-graduate university work in 1968. However, it was only in 1985, working on decision analysis issues, that I coined the phrase "Locational-State" to express more concisely what LST concerns, i.e. the state of variables according to their location in time and space. LST did not start off as a theory but rather as a method to specify data requirements in support of decision analysis for companies or voters. Over time, however, it became evident that the method can be applied to most situations and sectors, and it thus became a generally applicable theory: LST. The early applications were to be found in natural resources and ecology, and in agricultural production in particular (see in footnote ). The two most important derived benefits of LST are:

- A better understanding of incomplete and complete datasets

- The ability to increase the explained variance component relative to the unexplained variance component in datasets
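As a minimal sketch of the "complete versus incomplete dataset" idea, the Python below tags an observation with its location in space and time and reports which locational fields are missing. The class name `LocationalStateRecord`, its field names and the example values are hypothetical illustrations, not part of any published LST specification; the sketch assumes that a record is only complete when the value, the place and the time are all recorded.

```python
from dataclasses import dataclass, fields
from datetime import date
from typing import Optional


@dataclass
class LocationalStateRecord:
    """Hypothetical record: the state of one variable at a place and time."""
    variable: str                         # e.g. "crop_yield_t_per_ha"
    value: float                          # the observed state of the variable
    latitude: Optional[float] = None      # location in geographic space
    longitude: Optional[float] = None
    altitude_m: Optional[float] = None
    observed_on: Optional[date] = None    # location in time

    def missing_fields(self) -> list[str]:
        """Names of fields that were not recorded (left as None)."""
        return [f.name for f in fields(self) if getattr(self, f.name) is None]

    def is_complete(self) -> bool:
        """Complete only if value, place and time are all recorded."""
        return not self.missing_fields()


# Illustrative values only.
full = LocationalStateRecord("crop_yield_t_per_ha", 6.2,
                             latitude=52.2, longitude=0.1, altitude_m=15.0,
                             observed_on=date(1968, 8, 20))
partial = LocationalStateRecord("crop_yield_t_per_ha", 6.2)

print(full.is_complete())        # True
print(partial.missing_fields())  # ['latitude', 'longitude', 'altitude_m', 'observed_on']
```

The same yield figure appears in both records, but only the first can be interpreted against the conditions of the place and time at which it was observed.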

The specific economic significance of LST is elaborated in another footnote (Footnote 13) that is linked to the section covering "Locational-State specific explicit and tacit knowledge".


Footnote 4: Hayek's observations on necessary welfare provisions... These details are to be found in Albert Hirschman's "The Rhetoric of Reaction".

Footnote 5: Corporate tax

Corporate taxation is determined by a specific code of practice in drawing up accounts. Corporate ownership income and shareholder dividends come from net profits as well as share allocations, whereas workforce wages are classified as a cost item. Operations and accountancy practice provide far more leeway for managing management and ownership compensation than for workforce wages. As a result, under financialization and with executive bonuses closely tied to share values, the share of wages in overall cash flow has been declining while profit shares have increased. Any variation in corporate taxation impacts wages negatively. A rise in corporate taxation squeezes wages, while a fall in corporate taxation is often used as an opportunity to increase leverage to buy back the company's own shares and raise their value, with the same negative impact on the prospects for wage adjustments.


Footnote 6: The plight of "late developers"

The intellectual development of children between the ages of 10 and 16 is particularly rapid, and individuals vary in their rate of advance. Early attainment is not a sign of superior intelligence, since later developers can excel and surpass the early qualifiers. As a result, a body of individuals who did not pass the examination at 11 years of age ended up in ordinary technical or modern schools. This was a dreadful waste, not because they did not enter grammar schools but because most technical and modern schools were not equipped to serve these students by teaching useful things.

Those who passed the 11+ faced a curriculum wholly dedicated to theoretical concepts and taught on the basis of "chalk and talk". This was also a waste, in the sense of merit being gained purely on the basis of theoretical tests.

As it transpired, secondary technical schools only developed effectively in places such as Portsmouth in Hampshire, where the local Technical High School became one of the best schools in the city. Thomas Cragg McNeill (1910-2002), the headmaster of the Technical High School in Portsmouth, had dedicated time to reviewing how other countries, such as Germany, handled technical education. He was successful in expanding the combined enrollment of the school from 200 in 1945 to over 1,000 by the time he retired in 1975. By that time it had begun to compete effectively with grammar schools as the first choice of parents in the city. During McNeill's time the school taught all of the subjects taught at grammar schools and, in addition, surveying, technical drawing and geology. Major additions also included laboratories and workshops: metal foundries, wood and metal work, pottery, art, test beds for motors and pumps, chemistry, physics and electrical labs, an observatory and even boat building, producing the school's own class of sailing boat. The school was the first in the city to gain a computer, donated by Basil de Ferranti, and it had by far the largest school orchestra in the city. Many of the devices used were designed and built by students, including the astronomical telescope and dome. The remarkable aspect of McNeill's dedication to this project and accomplishment was that the Technical High School was a state school, administered by the local authority.

McNeill studied the limitations of having children sit intelligence tests at 11+, a single point in time, when development is particularly rapid between 10 and 16 years of age, meaning that slightly slower developing children failed the 11+. McNeill proposed and helped introduce a system in Portsmouth to monitor borderline cases of children who did not pass the 11+ so that at the age of 12, 13 and sometimes later they could transfer to a grammar school or the Technical High School.


Footnote 7: Complexity

In 1925, R. A. Fisher published a book, "Statistical Methods for Research Workers", which reviewed the development of statistics up to that time. Fisher describes how efforts in population statistics had been directed towards determining the average; no particular effort had been made to analyse the degree to which populations deviated from the average. Fisher made significant contributions to the practical application of statistics by establishing methodologies which became a standard approach to determining the significance of experimental results. This was based upon knowledge of the shape of a population curve and the variance about the mean, or average. If the response of, say, a crop to some input fell outside what would be a reasonable expectation of the normal variation, then results could be judged to be significant (at different degrees of confidence). A convenient, perhaps too convenient, way to provide a shorthand description of a population became the declaration of the average and the observed coefficient of variation.
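A small illustration of that shorthand, assuming a handful of invented plot yields (not data from Fisher), uses Python's standard `statistics` module to reduce a sample to its mean and coefficient of variation (sample standard deviation divided by the mean):

```python
import statistics

# Invented plot yields in t/ha, for illustration only.
yields = [5.0, 6.0, 7.0, 6.0, 6.0]

mean = statistics.mean(yields)      # the "average"
sd = statistics.stdev(yields)       # sample standard deviation
cv_percent = 100 * sd / mean        # coefficient of variation, in percent

print(f"mean={mean:.2f} t/ha, sd={sd:.3f}, CV={cv_percent:.1f}%")
# prints: mean=6.00 t/ha, sd=0.707, CV=11.8%
```

Two numbers now stand in for five observations, which is both the convenience and the loss of detail described above.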

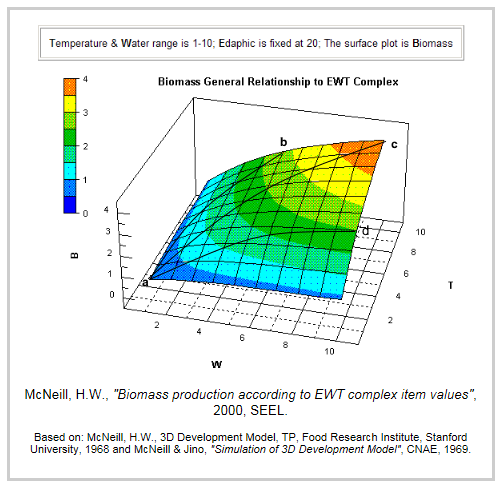

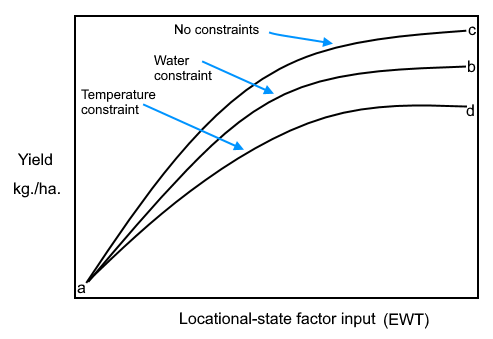

Fisher's work, and especially that relating to experimental design and the analysis of variance (ANOVA), became widely applied in the field of agricultural research and constituted an important contribution to the methods of experimental design.

In agricultural experimentation, performance trials and genetic selection work, the convenience of such methods has, to some extent, encouraged work to become too focused on the so-called explained variance attributable to the factors of interest to the researcher. Most other influences were often ignored or ended up in the residual, called "unexplained variance". Indeed, in many cases, what we refer to as the EWT complex (Edaphic [soil], Water and Temperature) ends up in the unexplained variance category when it remains a fundamental determinant of crop productivity and yield responses. Naturally there are many cases where this is not true, but rather than become involved in a detailed justification of this statement, it is more productive simply to note that in a workshop series organized by SEEL (Systems Engineering Economics Lab) the EWT complex has become the central factor in an approach to decision analysis which can include the design of experiments and surveys in the agricultural sector, with an extended typology of relevant determinants of crop yield. This has become an important element in the analysis of the impact of climate change on food production.
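To show how a determinant left in the residual can hide most of the explainable variation, the sketch below applies an ANOVA-style split of sums of squares to invented plot data: fertilizer level, a water regime standing in for one element of the EWT complex, and yield. The data and the function name `explained_share` are hypothetical; the point is only that grouping by fertilizer alone explains about a fifth of the variation, while adding the water regime explains almost all of it.

```python
from collections import defaultdict
from statistics import mean

# Invented plot records: (fertilizer level, water regime, yield in t/ha).
plots = [
    ("low", "dry", 3.0), ("low", "dry", 3.2),
    ("low", "wet", 5.0), ("low", "wet", 5.2),
    ("high", "dry", 4.0), ("high", "dry", 4.2),
    ("high", "wet", 6.0), ("high", "wet", 6.2),
]


def explained_share(records, key):
    """Between-group sum of squares divided by the total sum of squares.

    `key` maps a record to its group; whatever the grouping does not
    capture stays in the within-group ("unexplained") part.
    """
    ys = [r[2] for r in records]
    grand = mean(ys)
    ss_total = sum((y - grand) ** 2 for y in ys)
    groups = defaultdict(list)
    for r in records:
        groups[key(r)].append(r[2])
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups.values())
    return ss_between / ss_total


fert_only = explained_share(plots, key=lambda r: r[0])
fert_and_water = explained_share(plots, key=lambda r: (r[0], r[1]))
print(f"fertilizer only:    {fert_only:.1%}")       # about 19.8%
print(f"fertilizer + water: {fert_and_water:.1%}")  # about 99.2%
```

In a real trial the water regime would not be known unless it had been recorded for each plot, which is the locational-state point.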

Fisher, although the designer of methods that simplified experimental design and analysis, was fully aware of the limitations inherent in such an approach:

"No aphorism is more frequently repeated in connection with field trials, than that we must ask Nature few questions, or, ideally one question at a time. The writer is convinced that this view is wholly mistaken. Nature will best respond to a logical and carefully thought out questionnaire, indeed, if we ask her a single question, she will often refuse to answer until some other topic has been discussed"

R. A. Fisher (1926)


Footnote 8: Perception-Semantic complex

The Encyclopedia Britannica has a reasonable summary of Korzybski's work under the title of "General Semantics":

"General semantics, a philosophy of language-meaning that was developed by Alfred Korzybski (1879–1950), a Polish-American scholar, and furthered by S.I. Hayakawa, Wendell Johnson, and others; it is the study of language as a representation of reality. Korzybski’s theory was intended to improve the habits of response to environment. Drawing upon such varied disciplines as relativity theory, quantum mechanics, and mathematical logic, Korzybski and his followers sought a scientific, non-Aristotelian basis for clear understanding of the differences between symbol (word) and reality (referent) and the ways in which words themselves can influence (or manipulate) and limit human ability to think.

A major emphasis of general semantics has been in practical training, in methods for establishing better habits of evaluation, e.g., by indexing words, as "man1," "man2," and by dating, as "Roosevelt1930," "Roosevelt1940," to indicate exactly which man or which stage of time one is referring to.

Korzybski’s major work on general semantics is Science and Sanity (1933; 5th ed., 1994). The Institute of General Semantics (founded 1938) publishes a quarterly, ETC: A Review of General Semantics."


The question of translating observed facts into a logical message is complex, as police know when they take notes from people who have witnessed an event they are investigating. Getting to the truth of the matter, the basic facts, can be difficult.

William James observed that "Truth is what happens." The problem comes when someone is required to explain "what happened". The work of Alfred Korzybski and his "General Semantics" aimed to establish an approach to the interpretation of events in order to arrive at a rational explanation. This is an important topic, which I studied and discussed in some detail over several years with my father, Thomas C. McNeill, when we would refer to his very dog-eared original copy of Korzybski's "Science & Sanity". To some degree we came to the conclusion that Korzybski's approach, although important, had further to travel before it could arrive at conclusive results. In essence, as captured by the Encyclopedia Britannica, and paraphrasing, General Semantics aims to establish a clear understanding of the differences between symbols (words and explicit knowledge) and the reality they describe, the referent. A referent can be a person, object or idea to which words and phrases refer. In addition, GS is concerned with the analysis of the ways in which the words and expressions used can influence, manipulate and limit the human ability to understand and think coherently. The constraint in the system is, of course, the human capability to translate personal perceptions, already hampered by limitations in knowledge, into a clear message; the problem then becomes how perceptive the individual is. I have used Korzybski's concepts in the development of Locational-State Theory. For example, he referred to all events as being "time-bound", and I added to this the geographic "space-bound". I have applied decision analysis models largely based on Ronald Howard's Decision Analysis theory, which makes extensive use of models or maps. One of Korzybski's cautions was "The map is not the territory", i.e. models can be used to guide decisions, but outcomes are unlikely to be exactly as predicted by the model. This is why the locational-state theory approach is designed to bring models closer to reality.

Locational-state is an attempt to address complexity or heterogeneity. In this context it is important to acknowledge the contribution of the object-oriented approach to the construction of simulation models.

Object Oriented Approach to complexity

Accepting that the real world is characterized by eccentricity, i.e. heterogeneity and diversity, we have to accept that much is not the same; in fact, in practical terms, most things are different. Heterogeneity and diversity are the order of the day. The origins of so-called object-oriented programming are to be found in an attempt to address this very issue. However, after almost 50 years of development, there remain gaps in the coding systems (languages and/or scripts).

In 1957, Kristen Nygaard started writing computer simulation programs and at some point recognized the need for a better way of describing heterogeneity in the description and operation of any system. This quest ended in the object-oriented programming approach, which now dominates programming and scripting. The Simula languages were developed at the Norwegian Computing Center, Oslo, Norway, by Ole-Johan Dahl and Kristen Nygaard. Nygaard's work in operations research in the 1950s and early 1960s created the need for precise tools for the description and simulation of complex man-machine systems. In 1961 the idea emerged of developing a language (explicit knowledge) that could be used both for a system's description (for people) and for system prescription (as a computer program through a compiler). Such a language had to contain an algorithmic language, and Dahl's knowledge of compilers became essential. The SIMULA I compiler was partially financed by UNIVAC and was ready in January 1965. SIMULA I quickly gained a reputation as a simulation programming language but turned out, in addition, to possess interesting properties as a general programming language. When the inheritance mechanism was invented in 1967, Simula 67 was developed as a general programming language that could also be used in specialized domains, in particular for simulating systems. Simula 67 compilers started to appear for UNIVAC, IBM, Control Data, Burroughs, DEC and other computers in the early 1970s.

To some degree, the original quest of using OOP to simulate real-world circumstances was overtaken by software “linguists” and “logicians” who were more interested in this new “paradigm”, and the emphasis seemed to veer from the imperative of handling real-world simulation towards ways and means of perfecting the logical and syntactical coherence of programming languages. As a result, many younger programmers who have appeared since the 1980s are often unaware of the powerful simulation capabilities of OOP. However, most languages today contain OOP properties. JavaScript, for example, was standardized as ECMAScript, and development work on recent versions of the standard has been more concerned with removing logical inconsistencies than with improving the capability of the language to represent reality. Clearly, representation of reality will not be perfected if the languages we apply contain logical or operational inconsistencies, so this work is important.
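As a hedged illustration of the inheritance mechanism mentioned above, written in Python rather than Simula, the sketch below defines a hypothetical `Plot` class and a subclass `IrrigatedPlot` that inherits its behaviour but overrides how the water supply is calculated. The class names and the 600 mm saturation threshold are invented for the example; what it shows is how one model can hold heterogeneous objects that respond differently to the same question.

```python
from dataclasses import dataclass


@dataclass
class Plot:
    """Base class: a hypothetical field plot with its own local state."""
    name: str
    rainfall_mm: float

    def water_factor(self) -> float:
        # Hypothetical rule: response rises with water up to 600 mm, then saturates.
        return min(self.rainfall_mm / 600.0, 1.0)

    def expected_yield(self, base_t_per_ha: float) -> float:
        # Shared logic: every kind of plot scales the base yield by its water factor.
        return base_t_per_ha * self.water_factor()


@dataclass
class IrrigatedPlot(Plot):
    """Subclass: inherits expected_yield but overrides how water is supplied."""
    irrigation_mm: float = 0.0

    def water_factor(self) -> float:
        return min((self.rainfall_mm + self.irrigation_mm) / 600.0, 1.0)


plots = [Plot("A", 300.0), IrrigatedPlot("B", 300.0, irrigation_mm=300.0)]
for p in plots:
    print(p.name, p.expected_yield(8.0))   # prints "A 4.0", then "B 8.0"
```

Adding a new kind of plot means adding a subclass rather than rewriting the model, which is the property that made object orientation attractive for simulating heterogeneous systems.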

Reality - What is it?

Reality can be equated with the observational view of truth. In this context, truth is what we detect or observe happening. This follows William James's observation that "Truth is what happens." Therefore, in terms of scientific investigation, what happens is often assessed in terms of a hypothesis, that is, a view of why something happens in terms of the inter-relationship between determinant factors and outcomes. An example is a comparison of different levels of fertilizer application to a crop. Not only can the researcher measure the production associated with different fertilizer inputs; if there is sufficient comparative data, he can also build up a quantitative view of the relationship between many levels of fertilizer input and many production levels, in the form of a functional relationship or equation.

In the end, the detected "responses" (production) to "events" (different fertilizer inputs) are "what happens".
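As a sketch of turning such comparative data into a functional relationship, the Python below fits a quadratic response curve, `y = a + b*x + c*x**2`, by least squares using the normal equations. The quadratic form, the function name `fit_quadratic` and the trial data (fertilizer in units of 100 kg N/ha, yield in t/ha) are assumptions for illustration; the data were chosen to lie exactly on a known curve so that the fit can be checked.

```python
def fit_quadratic(x, y):
    """Least-squares fit of y = a + b*x + c*x**2 via the normal equations."""
    # Normal equations (X^T X) beta = X^T y for the basis 1, x, x^2.
    s = [sum(xi ** k for xi in x) for k in range(5)]                  # sums of x^0..x^4
    t = [sum(yi * xi ** k for xi, yi in zip(x, y)) for k in range(3)]
    m = [[s[i + j] for j in range(3)] + [t[i]] for i in range(3)]     # augmented matrix
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(3):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [a - f * b for a, b in zip(m[r], m[col])]
    return tuple(m[i][3] / m[i][i] for i in range(3))


# Hypothetical trial: fertilizer in units of 100 kg N/ha, yield in t/ha.
fert = [0.0, 0.5, 1.0, 1.5, 2.0]
yld = [2.0, 3.75, 5.0, 5.75, 6.0]
a, b, c = fit_quadratic(fert, yld)
print(round(a, 6), round(b, 6), round(c, 6))       # 2.0 4.0 -1.0
print("peak response at", round(-b / (2 * c), 6))  # 2.0, i.e. 200 kg N/ha
```

With diminishing returns (c < 0) the fitted curve also gives the input level at which the modelled response peaks, -b/(2c). Such a curve nevertheless averages over whatever water, soil and temperature conditions the trial happened to experience.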

In order to identify where the logic in scripts lacks some required features or functions, it is necessary to establish what information is needed to measure responses and then to assess whether a script can faithfully describe that functional relationship, which would then enable us to build a working model of reality. The fundamental objective, when setting out to build a useful decision analysis model, is to identify the critical determinants of decision outcomes. These are identified through the application of assumed or known functional relationships between such determinants and outcomes. This then brings us to the need to define the methodologies used to collect the necessary information. Also, where we detect gaps in our ability to describe how data values inter-relate with other data sets, we can identify gaps not only in our knowledge but also in the features and syntax of the script or programming language.

In the statistical procedures developed by Fisher in the form of analysis of variance (ANOVA), we explicitly declare that there are two types of variance. One is explained variance, which we can attribute to the determinant factor inputs affecting outcomes; the other is unexplained variance, where we do not have sufficient information on other determinants of the observed outcome.
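For the simplest case, a one-way layout with $k$ treatment levels, $n_i$ observations at level $i$, group means $\bar{y}_i$, overall mean $\bar{y}$ and $N = \sum_i n_i$ observations in total, the standard identity behind this split of variance, and the F ratio used to judge whether the explained part is significant, can be written as follows (multi-factor designs partition the explained part further):

```latex
\underbrace{\sum_{i=1}^{k}\sum_{j=1}^{n_i}\left(y_{ij}-\bar{y}\right)^2}_{SS_T\ \text{(total)}}
= \underbrace{\sum_{i=1}^{k} n_i\left(\bar{y}_i-\bar{y}\right)^2}_{SS_B\ \text{(explained)}}
+ \underbrace{\sum_{i=1}^{k}\sum_{j=1}^{n_i}\left(y_{ij}-\bar{y}_i\right)^2}_{SS_W\ \text{(unexplained)}}

F = \frac{SS_B/(k-1)}{SS_W/(N-k)}, \qquad R^2 = \frac{SS_B}{SS_T}
```

Any determinant that is not recorded, and so cannot define a grouping, necessarily ends up inside $SS_W$.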

Now, it is important to note that researchers and scientists are often satisfied with a truth that readily admits that it is only part of the whole truth (explained variance + unexplained variance), and they are prepared to take decisions using the partial truth extracted from an experiment. The justification for this is that the explained variance was statistically significant in terms of its coincidence with, or departure from, the "normal" or "existing" population curve. This “reference” distribution represents a dynamic equilibrium between observed outputs, or characteristics of interest, and the range of existing, but often unknown, determinants. The job of the investigator is to design experiments that isolate the effects of specific determinant factors of interest, in order to assess the significance of these specific cause-and-effect relationships. In many cases, locational-state information helps complete the picture by identifying additional determinants, some of which are more significant than is often realised.

Footnote 9: Personal locational-state history

The different life stages of a person are associated with different locations at specific times, coinciding with the people they meet and influence in one way or another, or are with: family in the early days and, later, acquaintances, teachers and others. What they witness in terms of events also depends upon their space-time location. Combining this series of events with the flow of information and knowledge each person is exposed to, or contributes to, results in a unique personal locational-state history. In the advanced work at the ITTTF in Brussels, one of the spin-offs in 1985 was the Accumulog, an immutable database to record new data, information and knowledge, as well as tacit knowledge in the form of human operational capabilities and performance, on a cumulative basis. This was similar to the blockchain concept announced more than two decades later in connection with the cryptocurrency Bitcoin.
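The internal design of the Accumulog is not described here, so the following is only a generic Python sketch of the hash-chaining idea that makes a cumulative record tamper-evident: each new entry's SHA-256 hash covers the previous entry's hash, so altering any earlier entry breaks verification. The class `CumulativeLog`, the helper `entry_hash` and the example payloads are hypothetical.

```python
import hashlib
import json


def entry_hash(prev_hash: str, payload: dict) -> str:
    """SHA-256 over the previous hash plus a canonical JSON encoding of the payload."""
    data = prev_hash + json.dumps(payload, sort_keys=True)
    return hashlib.sha256(data.encode("utf-8")).hexdigest()


class CumulativeLog:
    """Hypothetical append-only log; each entry commits to all earlier ones."""

    GENESIS = "0" * 64   # stand-in "previous hash" for the first entry

    def __init__(self):
        self.entries = []   # list of (payload, hash) pairs

    def append(self, payload: dict) -> str:
        prev = self.entries[-1][1] if self.entries else self.GENESIS
        h = entry_hash(prev, payload)
        self.entries.append((payload, h))
        return h

    def verify(self) -> bool:
        """Recompute every hash; any edit to an earlier payload breaks the chain."""
        prev = self.GENESIS
        for payload, h in self.entries:
            if entry_hash(prev, payload) != h:
                return False
            prev = h
        return True


log = CumulativeLog()
log.append({"who": "learner-1", "skill": "welding", "level": 2})
log.append({"who": "learner-1", "skill": "welding", "level": 3})
print(log.verify())               # True
log.entries[0][0]["level"] = 5    # tamper with recorded history
print(log.verify())               # False
```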

Footnote 10: Reflection

Reflection, a pause in which an individual wonders how something conveyed fits into their constellation of knowledge, usually requires some mental adjustment to slot the new information into the context of already known facts. If what is already understood does not agree with the new level of understanding implied by new information, individuals pause to reflect and, in the context of their current level of understanding, attempt to rationalize the new information. When it does not "fit" into the individual's constellation of understood facts and relationships, the result is a failure to understand what was conveyed. The cause is quite often that the person conveying the new information is using terminology with which the listener is unacquainted; as a result, the communication obscures the message rather than conveying its full meaning to the listener. This aspect of communicating knowledge was referred to under the Perception-Semantic complex.

Footnote 11: Following the science?

Taking into account the fact that many scientific investigations don't generate complete datasets, because of a failure to apply locational-state principles, there are often grounds for extreme caution in attempting to "follow the science". A typical example is the mathematical formulation of the Quantity Theory of Money, which is quite useless in predicting any relationship between money volumes and inflation. This is because there is no typology of the destinations of money flows to explain the real impacts of money creation, and therefore most critical variables do not feature in the QTM.
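For reference, the equation of exchange on which the Quantity Theory of Money is usually built, shown here in Irving Fisher's transactions form, is given below. In its strict quantity-theory reading, $V$ and $T$ are treated as roughly stable, so that changes in $M$ are expected to show up in $P$. Nothing in the equation records where newly created money is spent, which is the missing typology of destinations referred to above.

```latex
M\,V = P\,T
```

Here $M$ is the money stock, $V$ the velocity of circulation, $P$ the price level and $T$ the volume of transactions.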

Footnote 12: Access to Dr. Richard Werner's analysis and propositions

Dr. Werner maintains a website and blog which contain a clear account of his position with respect to the way monetarism is being applied today in the United Kingdom. He recounts the known issues also discussed on this site, such as declining investment and real incomes and the excessive transfer of quantitative easing funds into assets. At the end of his blog he outlines practical propositions on the type of banking system required in the UK, with an emphasis on local non-profit banks supporting SMEs. The model he outlines is based on the established and highly successful track record of support for SMEs in Germany, including over 1,500 competitive export companies (SMEs). A recent interview on Renegade Inc. gives a clear exposition of Werner's thesis on economic policy issues in the UK.

Footnote 13: Locational-state explicit and tacit knowledge

The first lecture I attended on agricultural economics at the School of Agriculture at Cambridge University happened to be the last lecture delivered by Ford Gibson Sturrock, the Head of the Farm Economics Branch, who was retiring. Aware that we were all first-year undergraduates, he decided to pass on some lessons that might be useful to us.

Economic dynamics

One lesson was that, because of the dynamics of the economy, any of us who pursued agricultural economics was likely to come across a recurring issue: determining the optimum size of a farm. Because of input inflation and the slow response of wages to inflation, the terms of trade tended to work against agriculture, so with time real incomes would decline. It was therefore necessary to increase minimum farm sizes to maintain a reasonable compensatory income for a family. The planning of smallholding aggregation into larger units needed to ensure that each farmer had an area of land sufficient to support himself and his family for at least 20 to 30 years, as a minimum condition. This would take into account the general information on annual rises in crop productivity, which at that time were around 2% per annum. Sure enough, this issue came up regularly on several assignments I worked on over the following 50 years.

Heterogeneity

Our courses included farm planning as well as agricultural policy. Farm planning involved visiting farms, some small and marginal and others large with a substantial turnover. We were required to come up with plans to increase their aggregate gross margins. Policy work was essentially about planning policies that took account of the very large range of farm sizes and types of farming. Hayek's "Knowledge problem" was therefore very apparent, although I don't think any of us knew of Hayek's position on this matter at the time.
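The productivity figure of around 2% per annum mentioned in the "Economic dynamics" lesson compounds quickly over the 20 to 30 year planning horizon. As a worked check, assuming (for illustration only) a constant 2% annual rise:

```latex
(1.02)^{20} \approx 1.486, \qquad (1.02)^{30} \approx 1.811
```

That is, yields per hectare would be roughly 49% higher after 20 years and 81% higher after 30. This says nothing by itself about input costs or output prices, which is where the terms-of-trade effect described in that lesson enters.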

Policies were based on knowledge from national surveys of farms, their sizes, types of production systems and estimated gross margins. Following the war, guaranteed minimum prices were designed with a cut-off point that helped smaller farms achieve an acceptable gross margin, and in bad years there was at least a guaranteed minimum price. As a result, the country's level of self-sufficiency in food rose substantially. Any particular issues related to Hayek's "Knowledge problem" were superseded by the main policy objective of increased self-sufficiency.

Addressing the minimum needs of social constituents

Following the war, foods were rationed and this helped moderate consumption. Food rationing ended in 1954.

A review of research experiments and surveys

Following the science?

In 1968 I needed to review agricultural production surveys and experimental results. I was surprised to find that most data collections took no account of the impacts of environmental and terrain characteristics on production. A typical experiment on the impact of fertilizer on production, for example, would limit data to different levels of fertilizer input and the yields obtained at each level of application. Seldom was any account taken of the rainfall, temperatures or soil texture. This I found to be odd because with the limited exposure I had received concerning ecology, these were important inputs. The states of these factors vary with geographic location (longitude, latitude and altitude) and time (chronological time (in the year concerned) and the age of crop) and therefore experimental or production survey results would also vary with the location and timing of the experiments or surveys. The variations in crop yields due to variations in temperature and water availability can exceed the variations due to fertilizer input. People just put this down to "the weather". As a result, correlation curves showing the relationship of fertilizer inputs to yield were the average figures with the variance being put down to "unexplained variance". In the context of Hayek's "Knowledge problem" the "scientific results" generated a conceptual and correct notion of the relationship between fertilizer and yields but this had no direct connection with the relationships at any given location. Therefore "following the science" was not a good idea because of the "knowledge problem".  Being somewhat surprised and dissatisfied with this state of affairs I developed a decision analysis model to project the interactions of soil fertility, water availability and temperatures in the production of biomass (crop yield) in order to determine if besides the fertilizer type experimentation it was possible to take into account the other factors. 
The result is shown on the right as a biomass production surface. Below in a 2D format production response curves in the conventional presentation. To face constraints having grown over smaller ranges of temperature and water availability.  Subsequent development Subsequent developmentIn 1985, while working at the ITTTF (Information Technology and Telecommunications Task Force) in Brussels, I headed a team and coordinated domain (sector) panels to identify potential new IT applications to support learning systems and operating over a global network. Understanding the pervasive nature of a global network and the fact that people would begin to rely almost exclusively on information supplied by others over the network, the question arose as to the reliability of information used to instruct, take commercial or policy decisions. The spectre of "fake information" became an issue of concern; today it appears frequently under the banner of "fake news". The question became, "How can a person needing to take a decision identify the information needed and to specify this as a request to those who can collect it and send it back? Then another question arises, "How can the recipient validate the data received?" Clearly, the first step is to identify data requirements and then specify these in a detailed fashion. The first step was a precise data identification and specification methodology. Recalling the agricultural survey and experimental data "knowledge problems" I added in the locational state elements. Although the original concepts related to agricultural production and natural ecosystems, it became evident that the same locational-state approach was required in any sector from building construction to the running of transport systems linking operational environmental conditions to standards of maintenance. The more precise records end up with a substantial locational-state content. 
As a result, what started out as a locational-state-based specification became a generalized approach: Locational-State Theory. Between 1983 and 1987 I was working specifically on the identification of IT applications at the ITTTF in Brussels. The core work was concerned with identifying techniques to support lifelong learning using digital technology on a global network. Under consideration were search systems, AI and Global Village concepts, including the role of such systems in governance, policy design and decision analysis. Lifelong learning covered all life stages: for example, medical services for prenatal women, schooling from infancy to pre-higher education, higher education, the world of work and retirement.

However, one issue affected all life stages: the very pervasiveness and potential benefits of global networks made it apparent that poor-quality information and misrepresentation could undermine those benefits. Now, some 36 years later, this has surfaced in topics such as "fake news", social media censorship, shadow banning and the general surveillance of the population.

In the world of business decision making involving valuable resources and transactions, we addressed the question, "How would a user of networked data be able to validate what is received?" In short, "How could users develop confidence in the quality and reliability of information received in response to a request?" This required an unambiguous information request and response cycle based on a system of clear data specification. In reviewing ways to specify data, it became apparent that data specifications are location-bound: data is linked to its location in geographic space as well as to the time when it was collected, and the data values describe the state of the variables at that location and time. For this reason I named the very first attempts to specify data on this basis locational-state data. By definition, locational-state data is quite specific. Data on the same variables collected at another location and time would also be locational-state data, but would likely be quite different.
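The idea of a location-bound data specification can be sketched as a pair of record types and a check applied on receipt. This is a minimal illustration under stated assumptions, not the Locational-State Theory specification itself: the class names (`LocationalState`, `LocationalStateRecord`, `DataRequest`), their fields and the `matches` helper are all hypothetical. A request states the variable, its units and the acceptable ranges of location and time; a received record is accepted only if its own locational state falls inside those ranges.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class LocationalState:
    """Where and when a value was observed (hypothetical field set)."""
    latitude: float        # decimal degrees, north positive
    longitude: float       # decimal degrees, east positive
    altitude_m: float      # metres above sea level
    observed_at: datetime  # chronological time of the observation


@dataclass(frozen=True)
class LocationalStateRecord:
    """A measured value bound to the locational state it describes (hypothetical)."""
    variable: str  # name of the measured property, e.g. "soil_moisture"
    units: str     # units of measurement, e.g. "percent_volume"
    value: float
    state: LocationalState


@dataclass(frozen=True)
class DataRequest:
    """A hypothetical explicit specification of the data a decision-maker needs."""
    variable: str
    units: str
    lat_range: tuple[float, float]
    lon_range: tuple[float, float]
    altitude_range_m: tuple[float, float]
    time_window: tuple[datetime, datetime]


def matches(request: DataRequest, record: LocationalStateRecord) -> list[str]:
    """Return the reasons a received record fails the request (empty list if it matches)."""
    problems: list[str] = []
    if record.variable != request.variable:
        problems.append(f"variable {record.variable!r} != {request.variable!r}")
    if record.units != request.units:
        problems.append(f"units {record.units!r} != {request.units!r}")
    s = record.state
    bounds = [
        ("latitude", s.latitude, request.lat_range),
        ("longitude", s.longitude, request.lon_range),
        ("altitude_m", s.altitude_m, request.altitude_range_m),
        ("observed_at", s.observed_at, request.time_window),
    ]
    for name, actual, (low, high) in bounds:
        if not (low <= actual <= high):
            problems.append(f"{name} {actual} outside [{low}, {high}]")
    return problems


if __name__ == "__main__":
    # Hypothetical request: April 2021 soil moisture within a small lat/lon box.
    request = DataRequest(
        variable="soil_moisture", units="percent_volume",
        lat_range=(51.0, 52.0), lon_range=(-1.0, 0.5),
        altitude_range_m=(0.0, 200.0),
        time_window=(datetime(2021, 4, 1), datetime(2021, 4, 30, 23, 59)),
    )
    received = LocationalStateRecord(
        variable="soil_moisture", units="percent_volume", value=23.5,
        state=LocationalState(51.5, -0.1, 35.0, datetime(2021, 5, 2, 9, 0)),
    )
    print(matches(request, received))  # the observation date falls outside the window
```

Returning the list of failed conditions, rather than a bare true/false, gives the recipient a reason to send back with a rejected response, which is one way of closing the request and response cycle.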

The question then arises: if data on a specific variable measuring some property of an object differ between locations, to what degree can we explain why? Is there some aspect of the locational-state approach that can help establish the likelihood of a value being true, and are there ways to reject it as unlikely to be true? The best way to explain this is to return to the complexity of the agricultural economy, where Hayek's "Knowledge problem" can be seen to make sense. Locational-State Theory has become a broadly applicable analysis and is still a work in progress. The Locational-State Theory website can be accessed via the following link: Locational-State.
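One way to approach the likelihood question is to judge a received value only against observations made under a comparable state, rather than against a pooled average. The sketch below swaps in a conventional z-score outlier test for that purpose; it is not a method taken from Locational-State Theory, and the `Observation` fields, the similarity tolerances, the three-standard-deviation limit and the reference data are all hypothetical.

```python
from __future__ import annotations

import math
from statistics import mean, stdev
from typing import NamedTuple


class Observation(NamedTuple):
    """A yield report tagged with the state under which it was obtained (hypothetical fields)."""
    rainfall_mm: float   # growing-season rainfall
    mean_temp_c: float   # growing-season mean temperature
    yield_t_ha: float    # reported yield


def is_comparable(ref: Observation, received: Observation,
                  max_rain_diff_mm: float = 50.0,
                  max_temp_diff_c: float = 2.0) -> bool:
    """True if ref was observed under rainfall and temperature similar to received (assumed tolerances)."""
    return (abs(ref.rainfall_mm - received.rainfall_mm) <= max_rain_diff_mm
            and abs(ref.mean_temp_c - received.mean_temp_c) <= max_temp_diff_c)


def z_score(value: float, sample: list[float]) -> float:
    """Signed distance of value from the sample mean in sample standard deviations (needs >= 2 values)."""
    mu, sigma = mean(sample), stdev(sample)
    if sigma == 0:
        return 0.0 if value == mu else math.copysign(math.inf, value - mu)
    return (value - mu) / sigma


def assess(received: Observation, references: list[Observation],
           z_limit: float = 3.0, min_peers: int = 3) -> tuple[str, float | None]:
    """Judge a received yield only against references from a comparable state."""
    peers = [r.yield_t_ha for r in references if is_comparable(r, received)]
    if len(peers) < min_peers:
        return "undetermined", None  # too few comparable observations to judge
    z = z_score(received.yield_t_ha, peers)
    return ("plausible" if abs(z) <= z_limit else "implausible"), z


# Hypothetical reference data: four wet, mild sites and four dry, cool sites.
REFERENCES = [
    Observation(540, 21, 7.2), Observation(550, 22, 7.6),
    Observation(560, 23, 7.9), Observation(545, 22, 7.4),
    Observation(240, 16, 1.9), Observation(250, 17, 2.1),
    Observation(265, 18, 1.8), Observation(270, 17, 2.2),
]

if __name__ == "__main__":
    report = Observation(260, 17, 7.5)  # a high yield claimed for a dry site
    pooled = z_score(report.yield_t_ha, [r.yield_t_ha for r in REFERENCES])
    print(f"z against all references pooled: {pooled:.2f}")
    print("judged against comparable states:", assess(report, REFERENCES))
```

In the example, a yield of 7.5 t/ha reported from a dry site looks unremarkable against all eight references pooled together (a z-score of under 1), but lies far outside the spread of the four comparable dry-site observations, so it is flagged as implausible. With no comparable observations the function declines to judge and returns "undetermined".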


All content on this site is subject to Copyright

All copyright is held by © Hector Wetherell McNeill (1975-2021) unless otherwise indicated
